UUIDs are generally incompressible. This is because their values can be anything, and UUIDs in a payload generally don't repeat. So you may be surprised to learn that a JSON list of UUIDs compresses to about 56% of its original size. Here's an example:

```json
[
"572585559c5c4278b6947144d010dad3",
"b97ec0750f67468d964519e18000ccf6",
"c1c69970b9bc4c0a9266a3350a9b2ab1",
"2eb10805db484db7a196f89882d32142",
"4b3569aaac154195aaa5ea114eff6566",
"e253f04c1f414b7291e682ea465e8e19"
]
```

Now we compress it:

```python
>>> import gzip
>>> jdata = open('data.json', 'rb').read()  # read as bytes: gzip.compress() rejects str
>>> len(jdata)
216
>>> len(gzip.compress(jdata))
159
>>> 159 / 216
0.7361111111111112
```

Hmm, that's not what I said. What if we repeat the procedure with a list of 20 UUIDs?

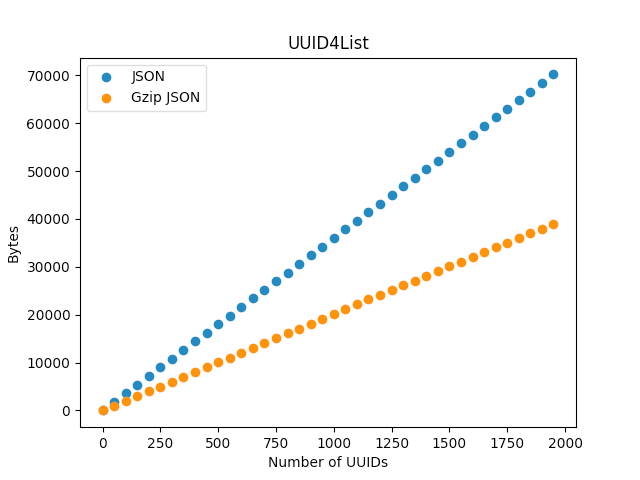

58%. Not bad. We can automate this by measuring both sizes for an increasing number of UUIDs.
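A minimal sketch of that automation, assuming the list is serialized with Python's `json.dumps` defaults (which reproduces the 216-byte figure for six UUIDs) and compressed with `gzip.compress` at its default level. The function name `sizes` and the chosen list lengths are hypothetical.

```python
import gzip
import json
import uuid


def sizes(n: int) -> tuple[int, int]:
    """Return (json_bytes, gzip_bytes) for a JSON list of n random UUID hex strings."""
    ids = [uuid.uuid4().hex for _ in range(n)]
    # json.dumps with default separators yields '["...", "..."]':
    # 34 bytes per quoted UUID plus 2 bytes for each ", " separator.
    raw = json.dumps(ids).encode("ascii")
    return len(raw), len(gzip.compress(raw))


if __name__ == "__main__":
    for n in (6, 20, 100, 1000):
        j, g = sizes(n)
        print(f"{n:>5} UUIDs: json={j:>6} gzip={g:>6} ratio={g / j:.2f}")
```

Because the UUIDs are random, the gzip column varies slightly from run to run, while the JSON column is always exactly 36 bytes per UUID.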

The data looks linear, so we can fit a line to each series, which gives the following slopes:

| Format | Bytes per additional UUID |
|---|---|
| JSON | 36.0 |
| gzip | 20.0 |

That gives a compression ratio of 36 / 20 = 1.8; put the other way, each additional UUID costs about 56% as many bytes once compressed.

Wait, but how?

You may be wondering how UUIDs could compress to nearly half their size. The answer isn't profound; it comes down to an assumption you probably overlooked:

The string representation of a UUID is wasteful. Each UUID is 32 hex digits, and a hex digit has only 16 possible values, so it carries 4 bits of information (2^4 = 16). Since each character takes at least 1 byte (8 bits), we spend 32 bytes to store 16 bytes (128 bits) of information. So when it comes time to compress the IDs, it's no wonder we get a compression ratio of nearly 2.
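To check that the hex encoding, not the UUIDs themselves, is what gzip exploits, the sketch below compresses the same random version-4 UUIDs in two forms: the 32-character `UUID.hex` string and the 16-byte `UUID.bytes` value from Python's `uuid` module. The list size of 1,000 is an arbitrary assumption.

```python
import gzip
import uuid

ids = [uuid.uuid4() for _ in range(1000)]

hex_text = "".join(u.hex for u in ids).encode("ascii")  # 32 bytes per UUID
raw_bytes = b"".join(u.bytes for u in ids)              # 16 bytes per UUID

# Each hex digit encodes 4 bits but occupies an 8-bit byte, so the text
# form is exactly twice the size of the binary form.
assert len(hex_text) == 2 * len(raw_bytes)

for label, data in (("hex text", hex_text), ("raw bytes", raw_bytes)):
    packed = gzip.compress(data)
    print(f"{label:>9}: {len(data)} -> {len(packed)} bytes ({len(packed) / len(data):.2f})")
```

The raw bytes should barely change in size, consistent with UUIDs being essentially incompressible, while the hex text should shrink to roughly half, ending up close to the size of the raw bytes.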